The camera will likely move between exposures, so we need to align corresponding pixels in the different images.

The median threshold bitmap (MTB) is one method for aligning two images:

- Find the median pixel intensity in each image and use it as a threshold to create a binary image.
- Measure the XOR difference between the two binary images.
- Shift one image to minimize the XOR difference.
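The three steps can be sketched in pure Python as follows. This is a minimal illustration, not a reference implementation: images are assumed to be grayscale lists of rows of the same size, the function names are hypothetical, and the shift is found by exhaustive search over a small window rather than by a multiscale search.

```python
from statistics import median


def threshold_bitmap(img: list[list[float]]) -> list[list[bool]]:
    """Mark each pixel True if it is above the image's median intensity."""
    med = median(v for row in img for v in row)
    return [[v > med for v in row] for row in img]


def shifted(bm: list[list[bool]], dx: int, dy: int) -> list[list[bool]]:
    """Shift a bitmap by (dx, dy); pixels shifted in from outside the frame are False."""
    h, w = len(bm), len(bm[0])
    return [
        [bm[y - dy][x - dx] if 0 <= y - dy < h and 0 <= x - dx < w else False
         for x in range(w)]
        for y in range(h)
    ]


def xor_count(a: list[list[bool]], b: list[list[bool]]) -> int:
    """Count the pixels where the two bitmaps disagree (the XOR difference)."""
    return sum(p != q for ra, rb in zip(a, b) for p, q in zip(ra, rb))


def align(ref, mov, max_shift: int = 2) -> tuple[int, int]:
    """Return the (dx, dy) shift of `mov` that minimizes the XOR difference to `ref`."""
    ref_bm, mov_bm = threshold_bitmap(ref), threshold_bitmap(mov)
    shifts = [(dx, dy)
              for dy in range(-max_shift, max_shift + 1)
              for dx in range(-max_shift, max_shift + 1)]
    return min(shifts, key=lambda s: xor_count(ref_bm, shifted(mov_bm, *s)))
```

For example, if `mov` shows the same bright square as `ref` but one pixel lower and to the right, and at a darker exposure, `align(ref, mov)` returns `(-1, -1)`. Exhaustive search costs one full XOR per candidate shift, which is only practical for small shifts.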

Variants can use gradient descent and multiscale techniques.

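This method is insensitive to exposure: because the camera response is monotonic, thresholding at the median splits the pixels into nearly the same two halves at every exposure, even though the median value itself changes. It is also reasonably robust to noise once pixels near the median are excluded.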
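The exposure invariance can be checked numerically. The sketch below simulates a camera with a hypothetical gamma response (`expose` and its parameters are assumptions for illustration) and thresholds the same scene at two exposures: the median values differ, but the bitmaps are identical.

```python
from statistics import median


def threshold_bitmap(img):
    """Mark each pixel True if it is above the image's median intensity."""
    med = median(v for row in img for v in row)
    return [[v > med for v in row] for row in img]


def expose(img, exposure, gamma=1 / 2.2):
    """Hypothetical camera: scale radiance, apply a monotonic response, clip to 1."""
    return [[min(1.0, (exposure * v) ** gamma) for v in row] for row in img]


radiance = [[0.01, 0.05, 0.20],
            [0.03, 0.08, 0.12],
            [0.02, 0.07, 0.15]]
dark, bright = expose(radiance, 1.0), expose(radiance, 4.0)

print(threshold_bitmap(dark) == threshold_bitmap(bright))  # → True
```

The invariance holds only while no pixels clip: saturated pixels all map to the same value, which can move the median and change the bitmap.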

This method does not depend on the camera response curve, so the images can be aligned before the response curve is recovered from them.

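This method performs poorly when many pixels lie near the median, because noise can push them to either side of the threshold. To avoid this, exclude pixels within a small tolerance of the median from the XOR comparison.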
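The exclusion step can be sketched by computing a second mask per image that is False near the median, and ignoring any pixel excluded in either image when counting XOR differences. The function names and the tolerance value are assumptions for illustration.

```python
from statistics import median


def bitmaps(img, tol):
    """Return (threshold bitmap, keep mask); the mask is False within `tol` of the median."""
    med = median(v for row in img for v in row)
    thresh = [[v > med for v in row] for row in img]
    keep = [[abs(v - med) > tol for v in row] for row in img]
    return thresh, keep


def masked_xor(t1, k1, t2, k2):
    """Count disagreeing pixels, skipping any pixel excluded in either image."""
    return sum(a != b and ka and kb
               for r1, q1, r2, q2 in zip(t1, k1, t2, k2)
               for a, ka, b, kb in zip(r1, q1, r2, q2))


# Two noisy copies of the same scene: the two middle pixels swap sides of the median.
t1, k1 = bitmaps([[10, 50, 51, 90]], tol=4)
t2, k2 = bitmaps([[10, 51, 50, 90]], tol=4)
print(masked_xor(t1, k1, t2, k2))  # → 0
```

With `tol=0` the same pair reports 2 differing pixels, both caused only by noise around the median.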

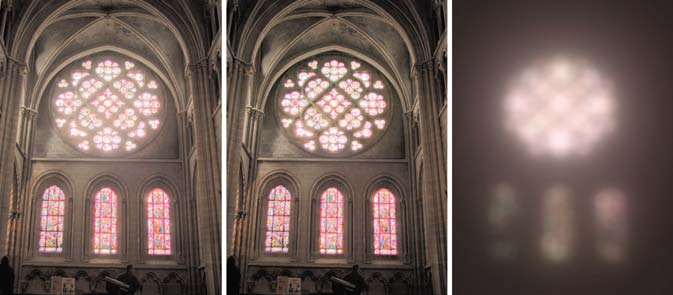

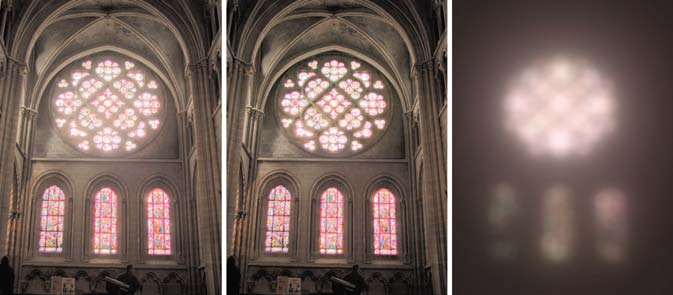

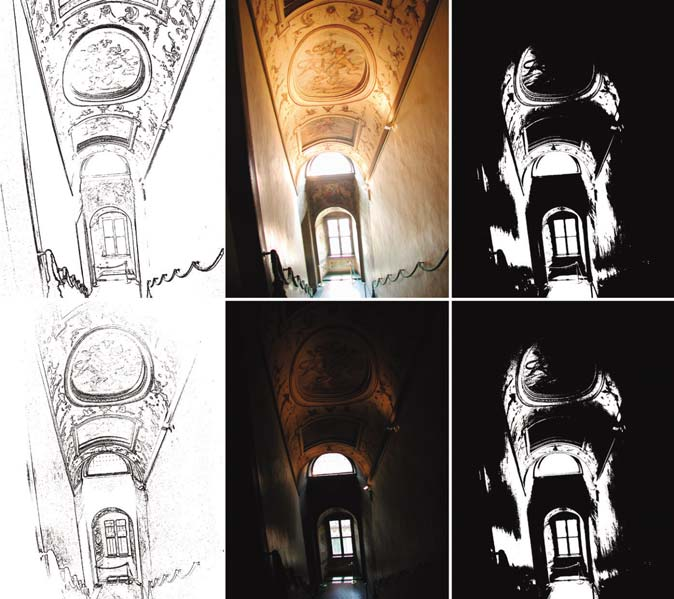

Below, the middle images are misaligned and taken at different exposures. The left image shows an edge bitmap, which is poorly suited to alignment because different edges are visible at different EVs. The right image shows the median threshold bitmap.

[ Reinhard, Pattanaik, Ward, Debevec 2006 ]

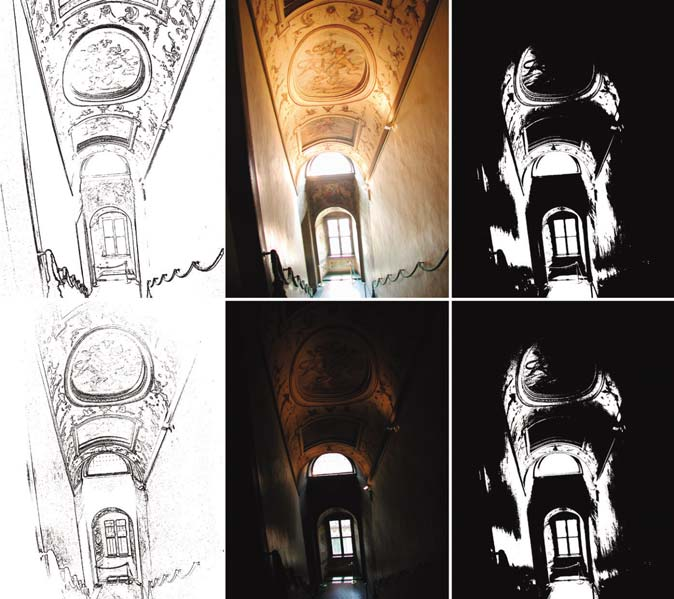

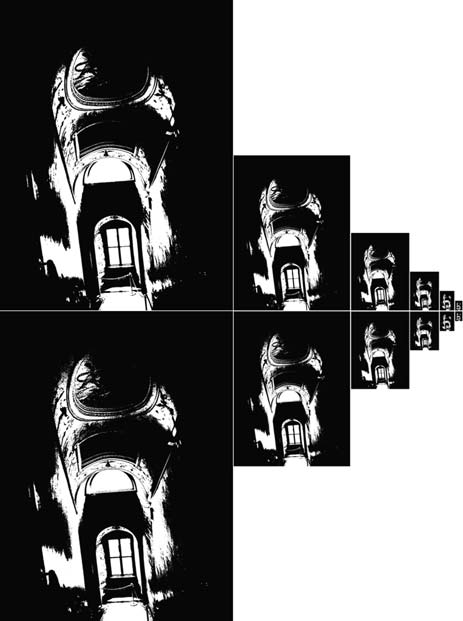

Below is a multiscale representation of each image. Alignment is done quickly at the coarsest scale and then refined at progressively finer scales.

[ Reinhard, Pattanaik, Ward, Debevec 2006 ]

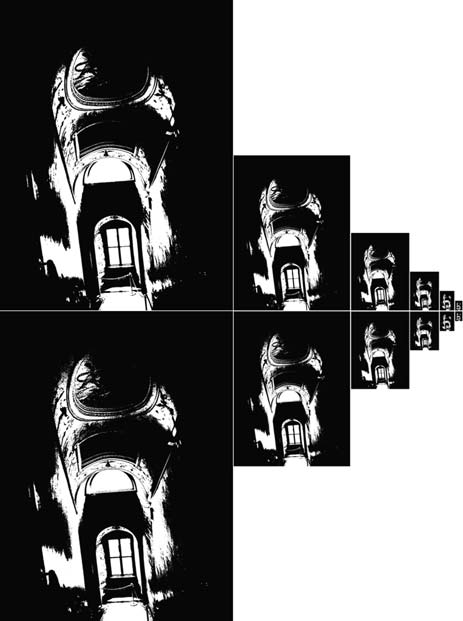

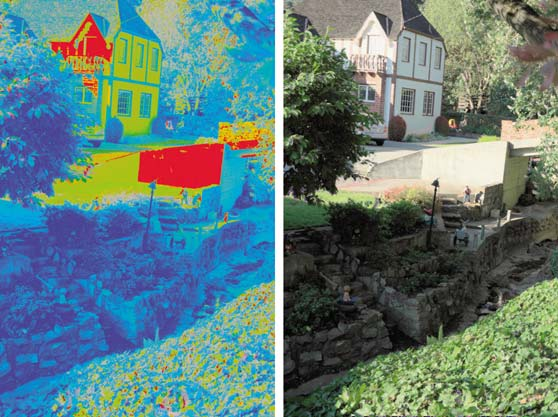
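The coarse-to-fine search can be sketched as a recursion: align half-resolution images first, double the resulting shift, and test only the nine shifts within one pixel of it at the current scale. This is a minimal sketch; downsampling by 2x2 averaging and all function names are assumptions, and the bitmap is recomputed from the grayscale image at each scale.

```python
from statistics import median


def threshold_bitmap(img):
    """Mark each pixel True if it is above the image's median intensity."""
    med = median(v for row in img for v in row)
    return [[v > med for v in row] for row in img]


def downsample(img):
    """Halve resolution by averaging 2x2 blocks (an odd last row/column is dropped)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
              + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4
             for x in range(w)] for y in range(h)]


def shifted(bm, dx, dy):
    """Shift a bitmap by (dx, dy); pixels shifted in from outside the frame are False."""
    h, w = len(bm), len(bm[0])
    return [[bm[y - dy][x - dx] if 0 <= y - dy < h and 0 <= x - dx < w else False
             for x in range(w)] for y in range(h)]


def xor_count(a, b):
    """Count the pixels where the two bitmaps disagree."""
    return sum(p != q for ra, rb in zip(a, b) for p, q in zip(ra, rb))


def pyramid_align(ref, mov, levels: int) -> tuple[int, int]:
    """Coarse-to-fine alignment: solve at half resolution, double, refine by +/-1 pixel."""
    if levels > 0 and min(len(ref), len(ref[0])) >= 2:
        cx, cy = pyramid_align(downsample(ref), downsample(mov), levels - 1)
        cx, cy = 2 * cx, 2 * cy  # one pixel at half resolution is two pixels here
    else:
        cx, cy = 0, 0  # coarsest level: start the search at zero shift
    ref_bm, mov_bm = threshold_bitmap(ref), threshold_bitmap(mov)
    candidates = [(cx + dx, cy + dy) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    return min(candidates, key=lambda s: xor_count(ref_bm, shifted(mov_bm, *s)))
```

With `levels` halvings the search can reach shifts of up to 2^(levels+1) - 1 pixels while testing only nine shifts per scale, which is why the coarse levels make alignment fast.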

Below, the left image is composited from unaligned images, while the right image is composited from images aligned with the median threshold bitmap.

[ Reinhard, Pattanaik, Ward, Debevec 2006 ]

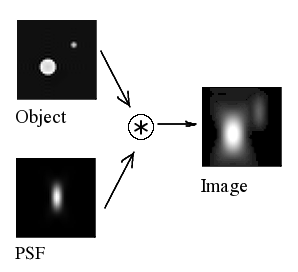

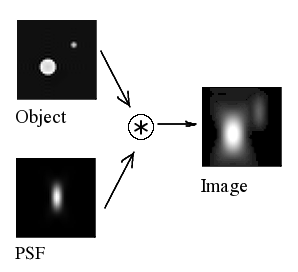

Lens flare brightens the area of the image surrounding a bright spot. It is caused by light scattering within the lens.

The flare can be described by the point spread function (PSF): the amount of light reaching each point in the image from a single point light source. Ideally the PSF is radially symmetric, but in reality it is not.

[Wikipedia]
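As a worked formula, and assuming the PSF is the same everywhere in the frame (an idealization), the observed image is the flare-free image $I$ convolved with the PSF $P$:

$$
I_{\text{obs}}(x, y) = \sum_{u,\,v} I(u, v)\, P(x - u,\; y - v)
$$

Here $P$ is assumed normalized so that its values sum to 1, and a radially symmetric PSF depends only on the distance $r = \sqrt{(x-u)^2 + (y-v)^2}$. The flare is the part of $P$ away from its central peak, which spreads light from a bright source at $(u, v)$ into neighboring pixels.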

Lens flare can be removed by estimating the PSF around the brightest pixels of a reduced-resolution HDR image.
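The estimation step can be sketched as a radial profile: average the intensity in one-pixel-wide rings around the brightest pixel and divide by that pixel's value. This sketch assumes a single point-like source, a radially symmetric PSF (which the text notes is only an approximation), and that everything around the peak is flare, so real scene detail near the source would inflate the estimate. The function name is hypothetical, and the subsequent subtraction of the predicted flare is not shown.

```python
import math


def radial_profile(img, max_radius):
    """Normalized mean intensity in rings around the brightest pixel (ring 0 is the peak)."""
    h, w = len(img), len(img[0])
    peak_y, peak_x = max(((y, x) for y in range(h) for x in range(w)),
                         key=lambda p: img[p[0]][p[1]])
    peak = img[peak_y][peak_x]
    sums = [0.0] * (max_radius + 1)
    counts = [0] * (max_radius + 1)
    for y in range(h):
        for x in range(w):
            r = round(math.hypot(y - peak_y, x - peak_x))  # ring index by rounded distance
            if r <= max_radius:
                sums[r] += img[y][x]
                counts[r] += 1
    # Divide each ring's mean by the peak; empty rings get 0.
    return [s / (c * peak) if c else 0.0 for s, c in zip(sums, counts)]
```

In keeping with the text, the input would be a reduced-resolution HDR image, where the brightest pixels are not clipped and the profile is cheap to compute.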

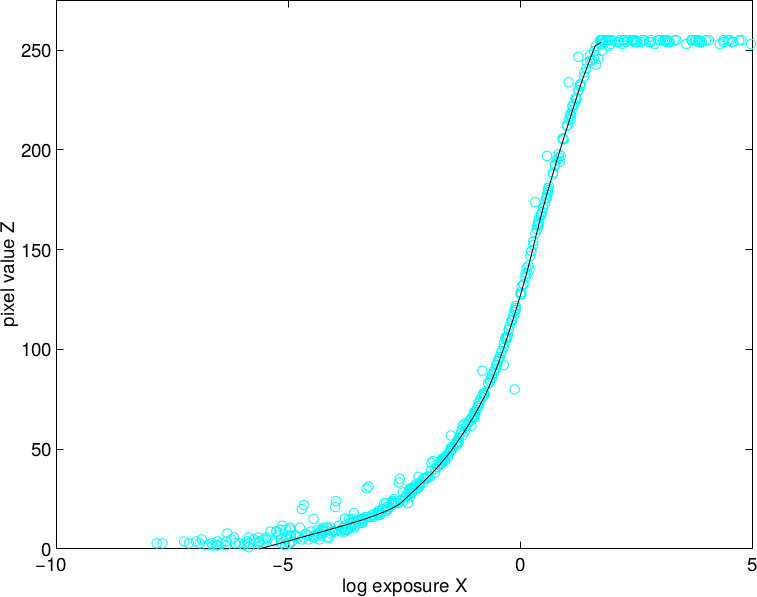

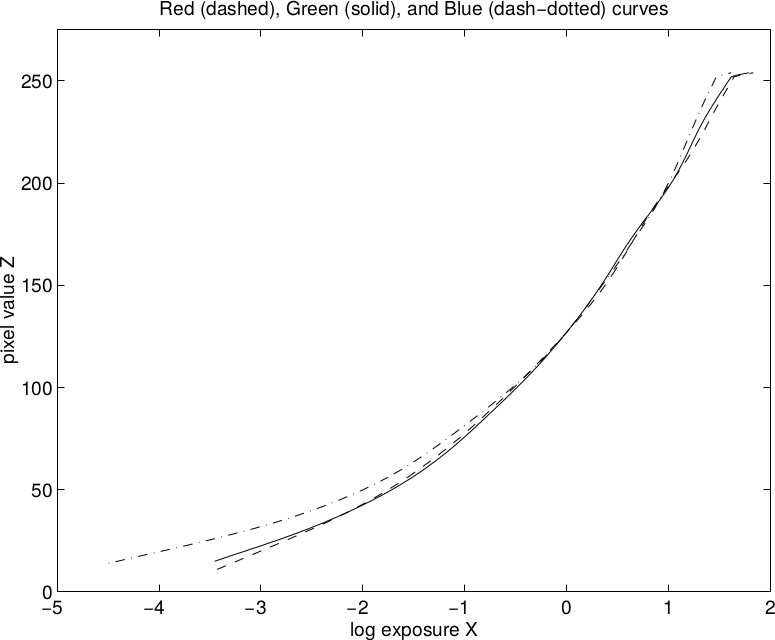

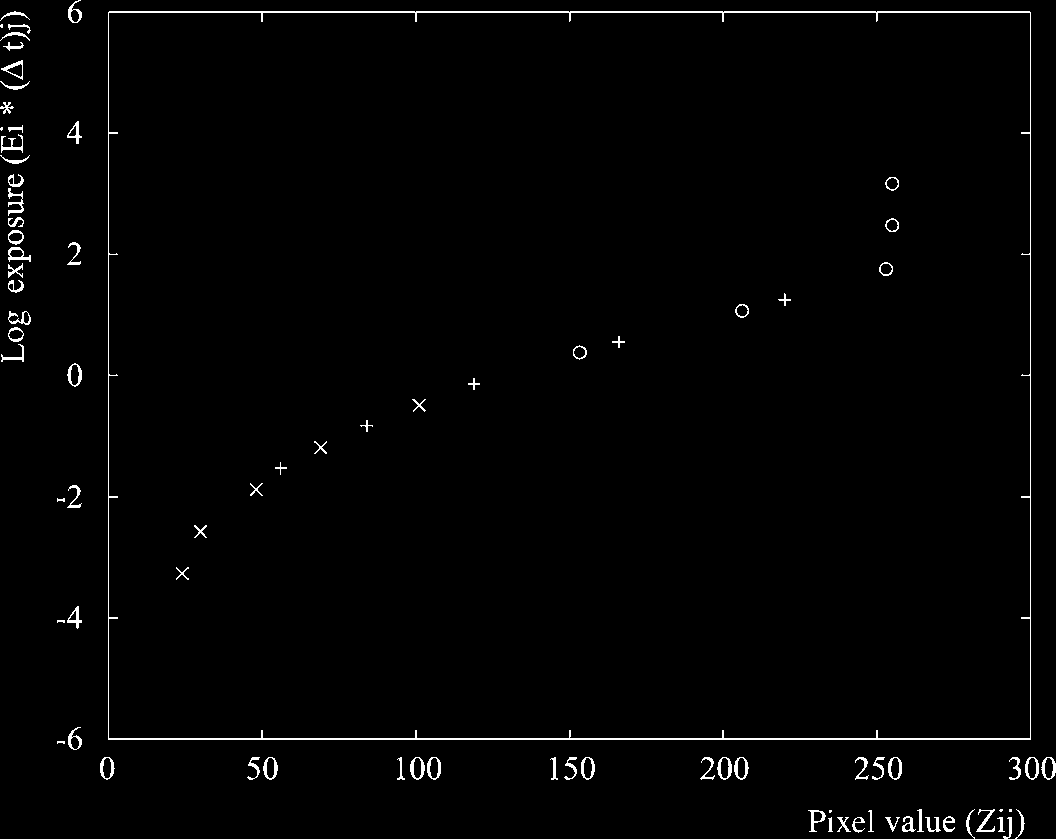

[Debevec & Malik 97]
